Decoding the Decoder: A Deep Dive into PyTorch’s TransformerDecoderLayer

The TransformerDecoderLayer is a fundamental building block within PyTorch's implementation of the Transformer model, crucial for sequence-to-sequence tasks like machine translation, text summarization, and question answering. This layer refines the target sequence representation by combining the encoder's output with the target tokens that precede each position.

How the Decoder Works

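Within a TransformerDecoder, several TransformerDecoderLayer instances are stacked, each refining the target representation produced by the one before it. Each layer receives two inputs: the output of the previous decoder layer (for the first layer, the embedded target sequence) and the encoder output, often called the memory, which is the contextualized representation of the source sequence. Stacking layers deepens this refinement. Generating the target one word at a time comes from running the whole decoder autoregressively: each newly predicted token is fed back in as input, so every prediction depends on both the source text and the portion of the target sequence generated so far.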
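The layer stacking described above can be sketched by building a small nn.TransformerDecoder and reproducing its forward pass with an explicit loop over its layers. The sizes here (d_model=64, nhead=4, three layers) are arbitrary values chosen for illustration, not recommendations.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

d_model, nhead, num_layers = 64, 4, 3  # illustrative sizes

layer = nn.TransformerDecoderLayer(d_model=d_model, nhead=nhead, batch_first=True)
# TransformerDecoder deep-copies `layer` num_layers times: the copies start with
# identical weights but are separate parameters, trained independently.
decoder = nn.TransformerDecoder(layer, num_layers=num_layers)
decoder.eval()  # disable dropout so the two passes below are comparable

tgt = torch.randn(2, 5, d_model)     # (batch, target length, d_model)
memory = torch.randn(2, 7, d_model)  # (batch, source length, d_model): encoder output

with torch.no_grad():
    stacked = decoder(tgt, memory)

    # Manual equivalent: each layer refines the previous layer's output,
    # while every layer attends to the same encoder memory.
    x = tgt
    for mod in decoder.layers:
        x = mod(x, memory)

print(stacked.shape)  # torch.Size([2, 5, 64])
print(torch.allclose(stacked, x, atol=1e-6))
```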

Key Parameters and Their Impact

The behavior of TransformerDecoderLayer is controlled by several key parameters:

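| Parameter | Default | Description |
|---|---|---|
| d_model | (required) | Dimensionality of the model's internal representations (the embedding size). |
| nhead | (required) | Number of attention heads. Each head attends over a d_model / nhead slice of the representation, letting the layer capture different relationships in parallel; d_model must be divisible by nhead. |
| dim_feedforward | 2048 | Size of the hidden layer in the feed-forward network, which controls its capacity. |
| dropout | 0.1 | Dropout probability, applied to the attention weights, inside the feed-forward network, and to each sublayer's output as regularization against overfitting. |
| activation | relu | Activation function in the feed-forward network: "relu", "gelu", or a unary callable. |
| layer_norm_eps | 1e-5 | Small constant added to the variance in layer normalization to avoid division by zero. |
| batch_first | False | If True, input and output tensors have shape (batch_size, sequence_length, d_model); otherwise (sequence_length, batch_size, d_model). |
| norm_first | False | If True, layer normalization is applied before each attention and feed-forward sublayer (pre-LN); otherwise it is applied after the residual addition (post-LN). |
| bias | True | If False, the Linear and LayerNorm layers are created without additive bias terms (added in PyTorch 2.1). |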

Tuning these parameters is crucial for optimal performance, and experimentation is often necessary. The ideal configuration depends on factors like dataset size, complexity, and the specific NLP task.

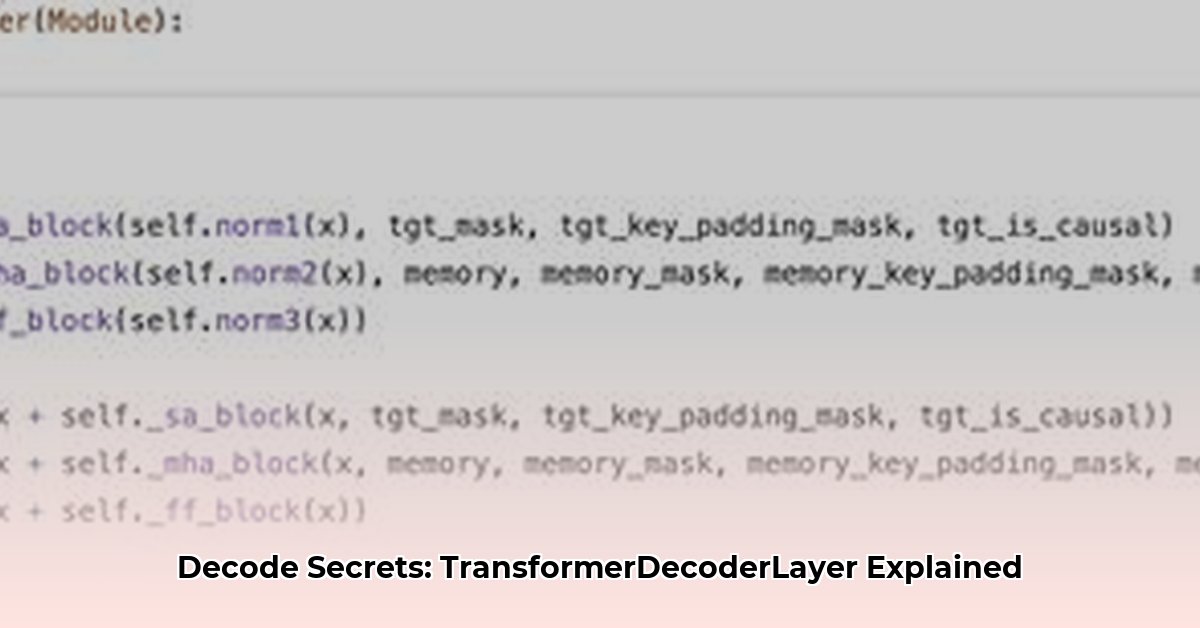

Inside the TransformerDecoderLayer: A Step-by-Step Breakdown

Each TransformerDecoderLayer performs the following operations:

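- Masked Multi-Head Self-Attention: The decoder attends to the previously generated output tokens, using a mask to prevent “peeking” ahead at future tokens. This ensures that each prediction is based only on the preceding context.

- Multi-Head Cross-Attention (Encoder-Decoder Attention): The decoder attends to the encoder's output, incorporating context from the source sequence into the generation process. The queries come from the decoder, while the keys and values come from the encoder output.

- Feed-Forward Network: A position-wise feed-forward network (two linear layers with an activation in between, applied to each position independently) further processes the combined information from the attention sublayers.

- Residual Connections and Layer Normalization: Each of the three sublayers is wrapped in a residual (skip) connection and a layer normalization, applied after the residual addition by default or before the sublayer when norm_first=True. The residual paths let gradients flow directly through deep stacks, and normalization keeps activations on a stable scale; together they make deep networks much easier to train.

Each layer applies these sublayers once, in the order listed. Deeper processing comes from stacking several layers in a TransformerDecoder, not from repeating the steps inside a single layer.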
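The sublayers listed above can be sketched as a small module built from nn.MultiheadAttention, nn.Linear, and nn.LayerNorm. MiniDecoderLayer is a hypothetical name, and the sketch assumes the default post-LN ordering (norm_first=False), batch-first tensors, and a ReLU activation; padding masks are omitted for brevity. The usage lines check the masking claim directly: changing later target positions leaves the outputs at earlier positions untouched.

```python
import torch
import torch.nn as nn


class MiniDecoderLayer(nn.Module):
    """Hypothetical post-LN decoder layer mirroring the sublayers described above."""

    def __init__(self, d_model: int, nhead: int, dim_feedforward: int = 2048, dropout: float = 0.1):
        super().__init__()
        self.self_attn = nn.MultiheadAttention(d_model, nhead, dropout=dropout, batch_first=True)
        self.multihead_attn = nn.MultiheadAttention(d_model, nhead, dropout=dropout, batch_first=True)
        self.linear1 = nn.Linear(d_model, dim_feedforward)
        self.linear2 = nn.Linear(dim_feedforward, d_model)
        self.norm1 = nn.LayerNorm(d_model)
        self.norm2 = nn.LayerNorm(d_model)
        self.norm3 = nn.LayerNorm(d_model)
        self.dropout = nn.Dropout(dropout)   # inside the feed-forward network
        self.dropout1 = nn.Dropout(dropout)  # on each sublayer's output
        self.dropout2 = nn.Dropout(dropout)
        self.dropout3 = nn.Dropout(dropout)

    def forward(self, tgt, memory, tgt_mask=None):
        x = tgt
        # 1. Masked self-attention over the target, then residual + LayerNorm.
        sa = self.self_attn(x, x, x, attn_mask=tgt_mask, need_weights=False)[0]
        x = self.norm1(x + self.dropout1(sa))
        # 2. Cross-attention: queries from the decoder, keys/values from encoder memory.
        ca = self.multihead_attn(x, memory, memory, need_weights=False)[0]
        x = self.norm2(x + self.dropout2(ca))
        # 3. Position-wise feed-forward network, then residual + LayerNorm.
        ff = self.linear2(self.dropout(torch.relu(self.linear1(x))))
        return self.norm3(x + self.dropout3(ff))


torch.manual_seed(0)
layer = MiniDecoderLayer(d_model=32, nhead=4, dim_feedforward=64).eval()
tgt = torch.randn(1, 6, 32)
memory = torch.randn(1, 9, 32)
causal = torch.triu(torch.ones(6, 6, dtype=torch.bool), diagonal=1)  # True = blocked

with torch.no_grad():
    out = layer(tgt, memory, tgt_mask=causal)
    tgt_changed = tgt.clone()
    tgt_changed[:, 3:] = torch.randn(1, 3, 32)  # replace only positions 3..5
    out_changed = layer(tgt_changed, memory, tgt_mask=causal)

# Positions 0..2 cannot attend to positions 3..5, so their outputs should not change.
print(torch.allclose(out[:, :3], out_changed[:, :3], atol=1e-6))
```

The submodule names were chosen to resemble those inside PyTorch's own layer, which makes side-by-side comparison easier; treat that correspondence as an implementation detail that may differ between versions.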

Implementing the TransformerDecoderLayer in PyTorch

Building a Decoder Layer

```python
import torch
import torch.nn as nn

# Example parameters (adjust as needed)
d_model = 512
nhead = 8
dim_feedforward = 2048
dropout = 0.1
activation = "relu"
layer_norm_eps = 1e-5
batch_first = True

# Pass arguments by keyword so none can be bound to the wrong parameter.
decoder_layer = nn.TransformerDecoderLayer(
    d_model=d_model,
    nhead=nhead,
    dim_feedforward=dim_feedforward,
    dropout=dropout,
    activation=activation,
    layer_norm_eps=layer_norm_eps,
    batch_first=batch_first,
)

# Example input (adjust as needed)
tgt = torch.randn(10, 32, d_model)     # target: (batch_size, target_length, d_model)
memory = torch.randn(10, 16, d_model)  # encoder output: (batch_size, source_length, d_model)

# Pass data through the decoder layer; the output has the same shape as tgt.
output = decoder_layer(tgt, memory)
```
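Because batch_first defaults to False, a layer constructed without it expects (sequence_length, batch_size, d_model) tensors instead. The sketch below feeds batch-first tensors of the same illustrative sizes to such a default layer by swapping the first two dimensions on the way in and out.

```python
import torch
import torch.nn as nn

d_model = 512
tgt = torch.randn(10, 32, d_model)     # (batch_size, target_length, d_model)
memory = torch.randn(10, 16, d_model)  # (batch_size, source_length, d_model)

seq_first_layer = nn.TransformerDecoderLayer(d_model=d_model, nhead=8)  # batch_first=False

# Swap to (sequence_length, batch_size, d_model), run the layer, then swap back.
out = seq_first_layer(tgt.transpose(0, 1), memory.transpose(0, 1)).transpose(0, 1)
print(out.shape)  # torch.Size([10, 32, 512])
```

Mixing up the two layouts does not always raise an error: when the shapes happen to be compatible, the layer will silently treat the batch dimension as the sequence dimension.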

Masking for Variable-Length Sequences

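Masks control which positions each attention operation may see. tgt_mask is the causal (look-ahead) mask: it prevents each target position from attending to later positions, so predictions depend only on preceding tokens. tgt_key_padding_mask and memory_key_padding_mask handle variable-length sequences by marking padding tokens in the target and memory sequences, respectively, so the model does not attend to them.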

```python
# Example masking (adjust as needed); continues from the example above
tgt_len = tgt.size(1)

# Causal mask of shape (tgt_len, tgt_len): True above the diagonal blocks attention
# to future positions. Using a boolean mask keeps it the same type as the padding masks.
tgt_mask = torch.triu(
    torch.ones(tgt_len, tgt_len, dtype=torch.bool, device=tgt.device), diagonal=1
)

# Padding masks of shape (batch_size, sequence_length): set True at padded positions.
tgt_key_padding_mask = torch.zeros(tgt.shape[:2], dtype=torch.bool, device=tgt.device)
memory_key_padding_mask = torch.zeros(memory.shape[:2], dtype=torch.bool, device=memory.device)

output = decoder_layer(
    tgt,
    memory,
    tgt_mask=tgt_mask,
    tgt_key_padding_mask=tgt_key_padding_mask,
    memory_key_padding_mask=memory_key_padding_mask,
)
```
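To see what these masks contain, the sketch below builds PyTorch's float causal mask for a four-token target and derives a boolean padding mask from per-sequence lengths. The lengths tensor is a made-up example; in practice it would come from the tokenizer or data loader.

```python
import torch
import torch.nn as nn

# Float causal mask: 0.0 on and below the diagonal (allowed), -inf above it (blocked).
causal = nn.Transformer.generate_square_subsequent_mask(4)
print(causal)

# Hypothetical batch of 3 sequences padded to length 5, with true lengths 5, 3 and 2.
lengths = torch.tensor([5, 3, 2])
max_len = 5
# True where the position index is at or beyond the sequence's true length (a padded slot).
padding_mask = torch.arange(max_len).unsqueeze(0) >= lengths.unsqueeze(1)
print(padding_mask)
# Row 0 has no padding, row 1 is padded at positions 3-4, row 2 at positions 2-4.
```

In nn.MultiheadAttention, a float mask is added to the attention scores, while in a boolean mask True marks positions that may not be attended to. Recent PyTorch versions emit a deprecation warning when a float attn_mask is combined with a boolean key_padding_mask, so it is simplest to use one mask type throughout.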

You can find more information and examples in the official PyTorch documentation.

Integrating into a Complete Transformer Model

The TransformerDecoderLayer is typically wrapped in a TransformerDecoder, which stacks several copies of it, and combined with a TransformerEncoder, token embeddings, positional encodings, and a final linear projection onto the vocabulary to form a complete Transformer model. From there, you can experiment with different activation functions, numbers of attention heads, and custom masking strategies. With a clear picture of what happens inside each TransformerDecoderLayer, you are well equipped to apply it to a wide range of sequence-to-sequence tasks.
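The sketch below wires these pieces into a minimal encoder-decoder and adds a greedy decoding loop, which is where word-by-word generation actually happens: each predicted token is appended to the decoder input and the prefix is run through the decoder again. TinySeq2Seq, greedy_decode, the learned positional embeddings, the small sizes, and the bos_id convention are all illustrative assumptions rather than part of PyTorch; padding masks, training, and caching of past decoder states are omitted for brevity.

```python
import torch
import torch.nn as nn


class TinySeq2Seq(nn.Module):
    """Hypothetical minimal encoder-decoder; sizes are illustrative only."""

    def __init__(self, vocab_size: int, d_model: int = 64, nhead: int = 4,
                 num_layers: int = 2, max_len: int = 128):
        super().__init__()
        self.embed = nn.Embedding(vocab_size, d_model)
        # Learned positional embeddings (an assumption; the original Transformer used sinusoids).
        self.pos = nn.Embedding(max_len, d_model)
        enc_layer = nn.TransformerEncoderLayer(d_model, nhead, dim_feedforward=128, batch_first=True)
        dec_layer = nn.TransformerDecoderLayer(d_model, nhead, dim_feedforward=128, batch_first=True)
        self.encoder = nn.TransformerEncoder(enc_layer, num_layers)
        self.decoder = nn.TransformerDecoder(dec_layer, num_layers)
        self.out = nn.Linear(d_model, vocab_size)  # projection onto the vocabulary

    def _embed(self, tokens):
        positions = torch.arange(tokens.size(1), device=tokens.device)
        return self.embed(tokens) + self.pos(positions)

    def encode(self, src):
        return self.encoder(self._embed(src))

    def decode(self, tgt, memory):
        T = tgt.size(1)
        # Boolean causal mask: True above the diagonal blocks future positions.
        causal = torch.triu(torch.ones(T, T, dtype=torch.bool, device=tgt.device), diagonal=1)
        h = self.decoder(self._embed(tgt), memory, tgt_mask=causal)
        return self.out(h)  # (batch, T, vocab_size) logits


@torch.no_grad()
def greedy_decode(model, src, bos_id: int, max_new_tokens: int):
    """Generate one token at a time, feeding each prediction back as decoder input."""
    model.eval()
    memory = model.encode(src)  # the encoder runs once per source batch
    ys = torch.full((src.size(0), 1), bos_id, dtype=torch.long, device=src.device)
    for _ in range(max_new_tokens):
        logits = model.decode(ys, memory)
        next_token = logits[:, -1].argmax(dim=-1, keepdim=True)  # most likely next token
        ys = torch.cat([ys, next_token], dim=1)
    return ys


torch.manual_seed(0)
model = TinySeq2Seq(vocab_size=100)
src = torch.randint(0, 100, (2, 7))
generated = greedy_decode(model, src, bos_id=1, max_new_tokens=5)
print(generated.shape)  # torch.Size([2, 6])
```

The untrained model produces arbitrary tokens; the point is the control flow. Re-running the full prefix at every step keeps the sketch short, at the cost of repeated computation that production decoders usually avoid with caching.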
